Artis

Mixed Reality Experience

- Team Size: 3 Developers and 6 Artists

- Development Time: 10 weeks

- Engine: Unreal Engine

- Platform: Mixed Reality

- Status: In progress

- My Role: Lead Developer

Description

Artis Mixed Reality Experience

This is a journey through space in which you discover how important we are to the universe and how connected we actually are. You listen to a story and can interact with atoms, and potentially much more.

What did I do?

I was the Lead Developer on this project and worked on several of its features, such as a framerate checker, rotation components and more. Another big responsibility was builds: I was mostly in charge of making sure a build was available, either to test a new feature or to test inside Artis, and that it kept a good framerate as a standalone version on the Quest 3.

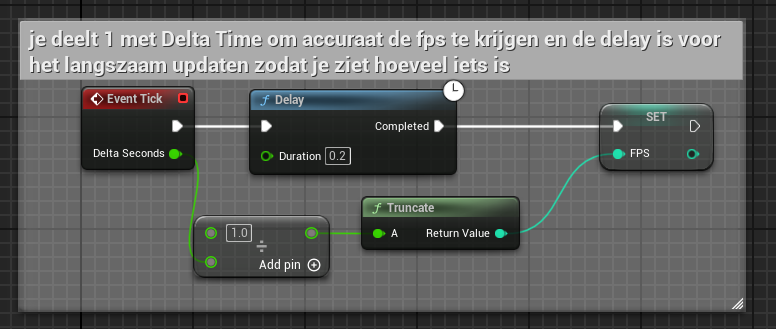

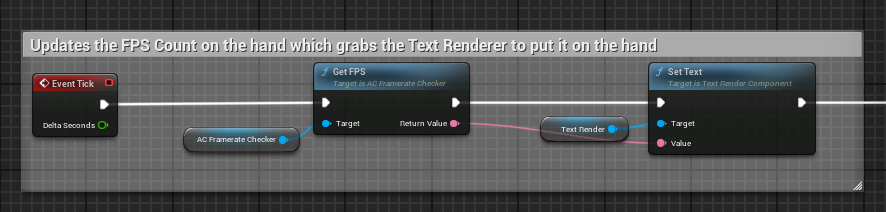

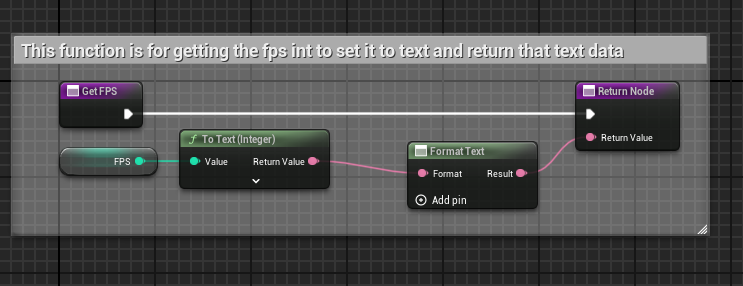

Framerate Checker

We needed to check the FPS in a standalone build of the project that ran on the headset alone, so we could track whether everything was performant and did not cause issues. I did this by converting the FPS value (a number) to text and showing it on a Text Block (Text Renderer) attached to the hand.
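As a rough, engine-independent sketch of the idea (not the Blueprint itself): the FPS value can be derived from the time each frame took and then formatted as text for the widget. The `FpsCounter` class, its smoothing factor and the `"NN FPS"` format are assumptions made for this illustration.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper: keeps a smoothed frames-per-second value
// computed from per-frame delta times.
class FpsCounter {
public:
    // deltaSeconds is the time the last frame took.
    // Non-positive values are ignored so we never divide by zero.
    void Tick(double deltaSeconds) {
        if (deltaSeconds <= 0.0) return;
        const double instantFps = 1.0 / deltaSeconds;
        // Exponential smoothing so the number shown on the hand does not flicker.
        smoothedFps_ = hasSample_
            ? smoothedFps_ + kSmoothing * (instantFps - smoothedFps_)
            : instantFps;
        hasSample_ = true;
    }

    double Fps() const { return smoothedFps_; }

    // The text that would be handed to the text widget.
    std::string ToText() const {
        char buffer[32];
        std::snprintf(buffer, sizeof(buffer), "%d FPS",
                      static_cast<int>(smoothedFps_ + 0.5));
        return buffer;
    }

private:
    static constexpr double kSmoothing = 0.1;  // assumed value, tune to taste
    double smoothedFps_ = 0.0;
    bool hasSample_ = false;
};
```

Calling `Tick` once per frame and pushing `ToText()` to the widget would be enough; the smoothing is optional and only there to keep the number readable.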

Spatial Anchors

Spatial Anchors are points in your world that stay in place. They are used to position the map correctly, so that multiple people playing together see the same thing at the same time.

The Spatial Anchors feature was built for a previous XRLab project. I was tasked with going through that code, implementing it in this project, and replacing and adding the correct references so that it works properly.
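The reason a shared anchor lines things up can be sketched with a little geometry. Each headset has its own tracking origin, so the same anchor has a different pose on each device, but content placed relative to the anchor ends up at the same physical spot. The sketch below is a simplified 2D version with hypothetical names (`Pose2D`, `AnchorToDevice`); it is not the XRLab code or an engine API.

```cpp
#include <cmath>

// Pose of the anchor as seen from one device's own tracking origin.
struct Pose2D {
    double x;
    double y;
    double yawDegrees;
};

struct Point2D {
    double x;
    double y;
};

// Converts a point expressed relative to the anchor into the local
// tracking space of one device: rotate by the anchor's yaw, then translate.
Point2D AnchorToDevice(const Pose2D& anchorInDevice, const Point2D& pointInAnchor) {
    const double kPi = 3.14159265358979323846;
    const double yaw = anchorInDevice.yawDegrees * kPi / 180.0;
    const double c = std::cos(yaw);
    const double s = std::sin(yaw);
    return {
        anchorInDevice.x + c * pointInAnchor.x - s * pointInAnchor.y,
        anchorInDevice.y + s * pointInAnchor.x + c * pointInAnchor.y
    };
}
```

Two devices would pass in different anchor poses but the same anchor-relative map position, and each gets the local coordinates it needs to render the map in the same real-world place.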

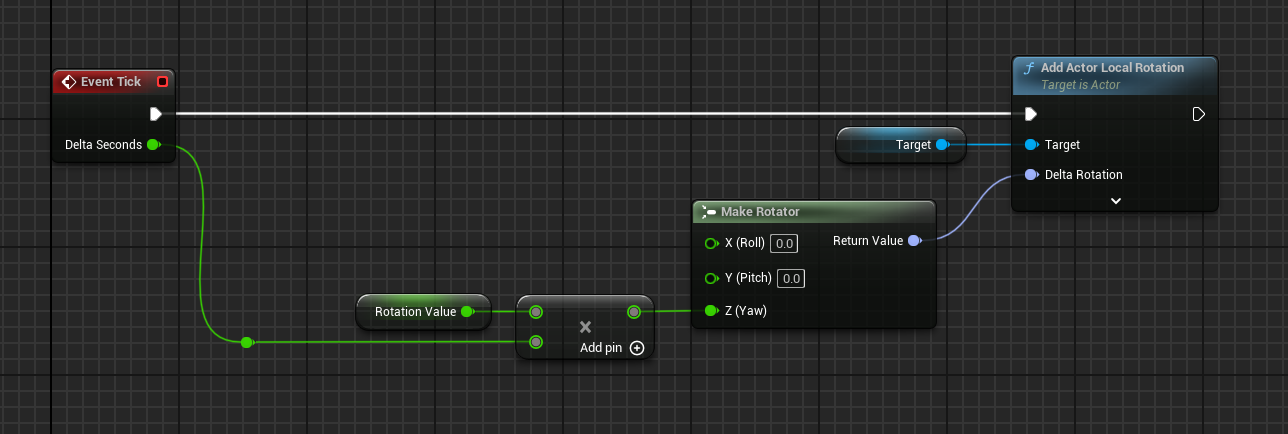

Planet Rotation

To make an object or planet rotate without copying nodes into every object, I made an Actor Component that I could put on any object, so they all do the same thing with different values. In this component I added a variable for the rotation speed, so it can be changed for each planet if necessary.

All that was needed was to rotate the object around one of its axes to make it spin.
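The logic of such a component can be sketched in plain C++ as follows. This is an illustration of the idea, not the Blueprint component: `Rotator`, `Axis` and `RotationComponent` are hypothetical stand-ins, and the speed unit (degrees per second) is an assumption.

```cpp
#include <cmath>

// Minimal stand-in for an object's rotation, in degrees.
struct Rotator {
    double pitch = 0.0;
    double yaw = 0.0;
    double roll = 0.0;
};

enum class Axis { Pitch, Yaw, Roll };

// Reusable component: one instance per object, each with its own speed and axis.
struct RotationComponent {
    double degreesPerSecond = 10.0;  // per-planet tunable speed
    Axis axis = Axis::Yaw;           // the single axis to spin around

    // Advances the rotation by one frame. Multiplying by deltaSeconds keeps
    // the spin speed independent of the framerate.
    void Tick(Rotator& rotation, double deltaSeconds) const {
        double& angle = Select(rotation);
        // fmod keeps the angle from growing without bound.
        angle = std::fmod(angle + degreesPerSecond * deltaSeconds, 360.0);
    }

private:
    double& Select(Rotator& rotation) const {
        switch (axis) {
            case Axis::Pitch: return rotation.pitch;
            case Axis::Roll:  return rotation.roll;
            default:          return rotation.yaw;
        }
    }
};
```

Because speed and axis live on the component, two planets can share the same logic and differ only in their values.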

Handtracking

Since the audience doesn't need a controller, I thought it would be smart to add hand tracking, so you can still interact with objects that can be grabbed.

Grabbing with Handtracking

In the gif above you see my tracked hands grab a small cube and let it go again. Getting to the point of grabbing an item took a lot of research and searching. In the VR template pawn I changed the grab function to accept the XRHands instead of a MotionGrip, so I could check whether both fingers (index finger and thumb) hit the same object. If they do, the grab happens.
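The "both fingers hit the same object" check can be sketched independently of the engine like this. `ObjectId`, `HandState` and `UpdateGrab` are hypothetical names for this illustration and do not come from the VR template.

```cpp
// Objects are identified by a simple integer id in this sketch.
using ObjectId = int;
constexpr ObjectId kNoObject = -1;

struct HandState {
    ObjectId thumbOverlap = kNoObject;  // object touched by the thumb collision point
    ObjectId indexOverlap = kNoObject;  // object touched by the index finger collision point
    ObjectId held = kNoObject;          // object currently grabbed, if any
};

// Starts a grab only when both fingertips touch the same object and
// nothing is held yet. Returns the object held after the update.
ObjectId UpdateGrab(HandState& hand) {
    const bool bothTouching =
        hand.thumbOverlap != kNoObject && hand.indexOverlap != kNoObject;
    if (hand.held == kNoObject && bothTouching &&
        hand.thumbOverlap == hand.indexOverlap) {
        hand.held = hand.thumbOverlap;
    }
    return hand.held;
}
```

Requiring both fingertips on the same object avoids accidental grabs when only one finger brushes past something.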

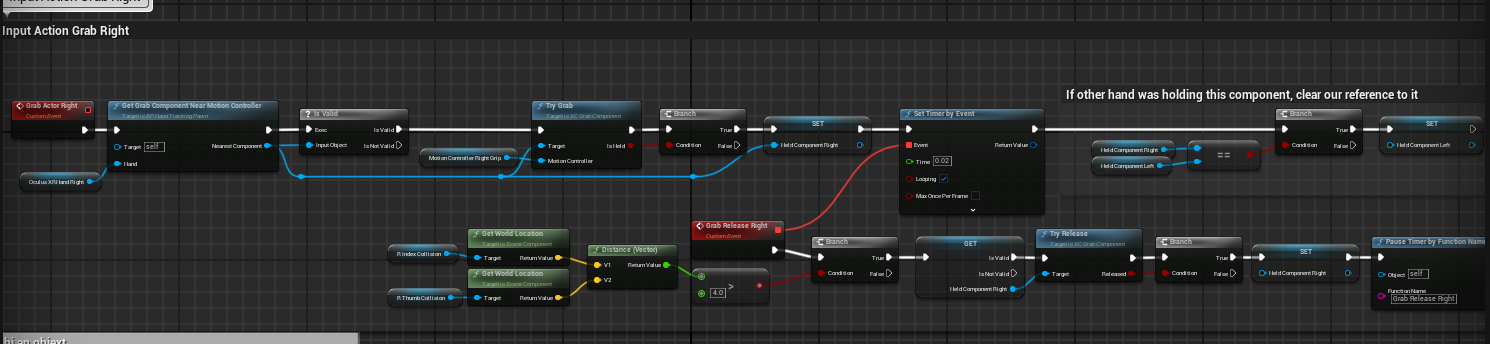

This is a reworked version of the VRTemplate hand input, where I changed the MotionGrip to the XRHands so that it picks up the new input.

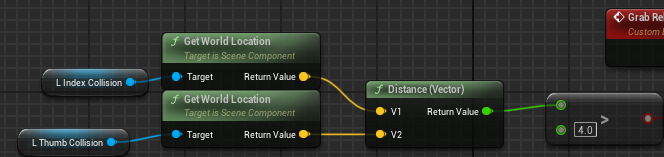

This is required to check whether the object is still in your hand. It compares a distance against a small threshold, and if the distance is bigger, it lets the object go.
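A minimal sketch of such a release check is shown below. It assumes the distance is measured between the thumb tip and the index fingertip; the same comparison would work for a hand-to-object distance. `Vec3`, `Distance` and `ShouldRelease` are hypothetical names, and the threshold value is up to the caller.

```cpp
#include <cmath>

struct Vec3 {
    double x;
    double y;
    double z;
};

// Euclidean distance between two points.
double Distance(const Vec3& a, const Vec3& b) {
    const double dx = a.x - b.x;
    const double dy = a.y - b.y;
    const double dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// True when the fingertips have moved further apart than the threshold,
// meaning the pinch has opened and the held object should be dropped.
bool ShouldRelease(const Vec3& thumbTip, const Vec3& indexTip, double releaseDistance) {
    return Distance(thumbTip, indexTip) > releaseDistance;
}
```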

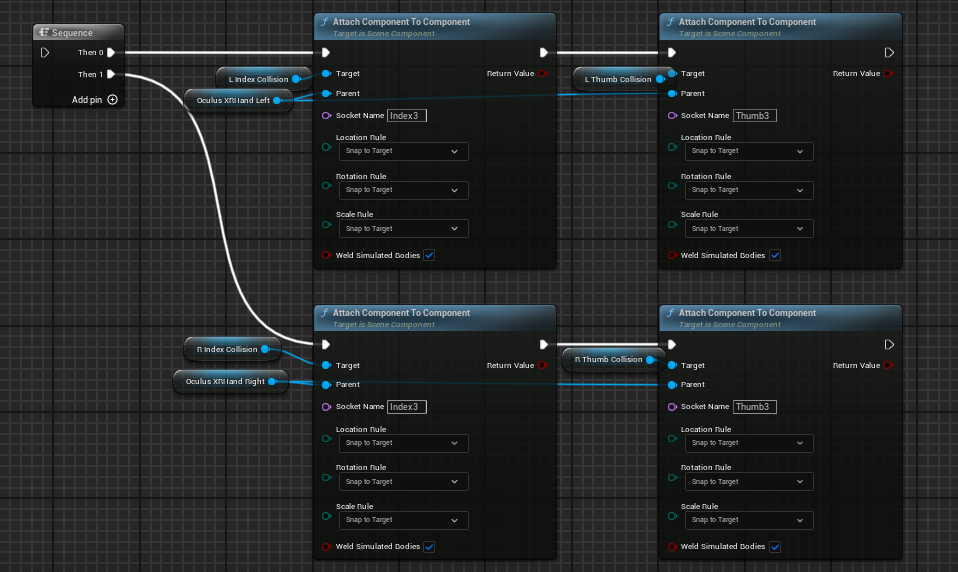

To actually be able to grab an object, I needed to put collision points on the index finger and the thumb. I did this by attaching them to specific points of the hand, so I didn't have to place them manually.

Interactable Objects

For this to work I had to change the Grab Component Blueprint and make my own version to put on the objects that needed to be grabbable. Only if it matches the Grab Component referenced in the VRPawn can an object, like a cube, a planet or an atom, be grabbed.

Planning

We used Codecks to track the tasks that needed to be done and divided them across our sprints.